I harbor no fear of artificial intelligence, nor do I hold it in high esteem. What I experience regarding the emergence of intelligent systems in healthcare is a more subdued sensation: a restrained confidence, a calculated optimism. I acknowledge their vast possibilities, yet I remain steadfast in the conviction that the most profound work in medicine occurs not through automation, but through human relationships. There exists a subtle strength that resides between symptom and narrative, between figures and subtleties. It is within these quiet moments that medicine flourishes. This is an aspect no machine can completely substitute.

Like many healthcare professionals today, I have witnessed the growing incorporation of digital resources into our practice. Intelligent platforms now create note templates, provide differential recommendations, and identify high-risk patients. They respond rapidly and reveal trends with remarkable accuracy; however, their effectiveness relies solely on the quality of the input—on the prompt, the inquiry, the context. These systems are unaware of what is significant unless we instruct them. In this regard, prompting transforms into a type of clinical dialogue. Similar to a patient history, it uncovers not only what is queried but also how meticulously and purposefully we have been trained to listen.

At times, I too have hesitated when a suggestion appeared algorithmically correct but felt intuitively off.

Nevertheless, there exist boundaries that no model can transcend. A machine cannot perceive the quiver in a patient’s voice. It cannot interpret that a daughter’s silence may convey more dread than words could express. It does not sense the ethical burden of deciding when to speak and when to simply offer presence. Computational systems are programmed to identify patterns; physicians, conversely, are educated to embrace paradox. Modern medicine necessitates both.

I endorse the incorporation of intelligent systems in healthcare, not because I believe they are flawless, but because I acknowledge their incompleteness. Incomplete entities necessitate shaping. Too frequently, the technologies that enter our clinical environments are developed far from the realities of patient care. Predictive models and decision-support mechanisms are introduced without ample clinical input in their creation, validation, or application. The outcome is a system intended to assist physicians yet constructed with scant input from those who understand the implications of its success or failure.

I’ve observed peers questioning the conclusions of tools they had no role in creating. It’s an unease that persists, even when the results are accurate.

When the stakes rise, exclusion is not benign—it is perilous. I have witnessed clinical instruments fail precisely because they were designed without grasping the intricacies of patient presentations or the subtleties of clinical judgment. Well-intentioned algorithms can inflict harm if they disregard the lived experiences of those in practice.

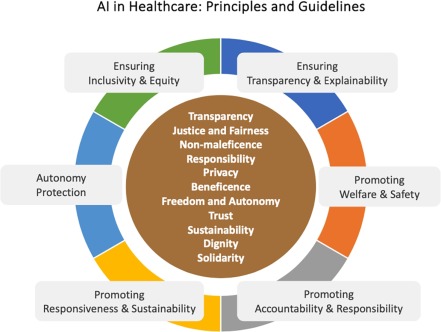

Physicians should not passively receive these tools. We must actively engage in the framework that shapes them—participating in model design, data management, product enhancement, and ethical oversight. When clinicians are involved in the development phase, we contribute more than expertise. We add judgment, context, and a deep understanding of what is at risk when systems falter. The values embedded within these tools will always echo the priorities of their creators. If clinicians are absent from that dialogue, the complexities of care are also excluded.

Furthermore, diversity in this shaping process is not optional; it is vital. Clinicians from marginalized backgrounds, from multilingual communities, and from underserved environments provide viewpoints frequently absent from both data and design sessions. Their engagement helps ensure that the systems we develop do not merely mirror the majority but encompass the entire range of human experience. When we engage, we do not just “represent”; we recalibrate. We remind the system that not every patient communicates in textbook English, that not every case adheres to guidelines, and that not every human story slots neatly into a clinical framework.

These tools are listening, but only to the voices they’ve been trained to recognize, and to those brave enough to speak with purpose.

Thus, I have faith in the potential of intelligent systems. I believe in their ability to assist us, to alleviate burdens, to enhance insight. However, I hold an even stronger belief in clinical acumen, in moral creativity, and in the quiet judgments made by individuals who realize that medicine is not merely a precision science—it is an act of presence.

Let the machine aid.

Let the mind stay ours.

Our voices are required now—before the algorithms act on their own.

Shanice Spence-Miller is a resident in internal medicine.