We’ve all witnessed the buzz.

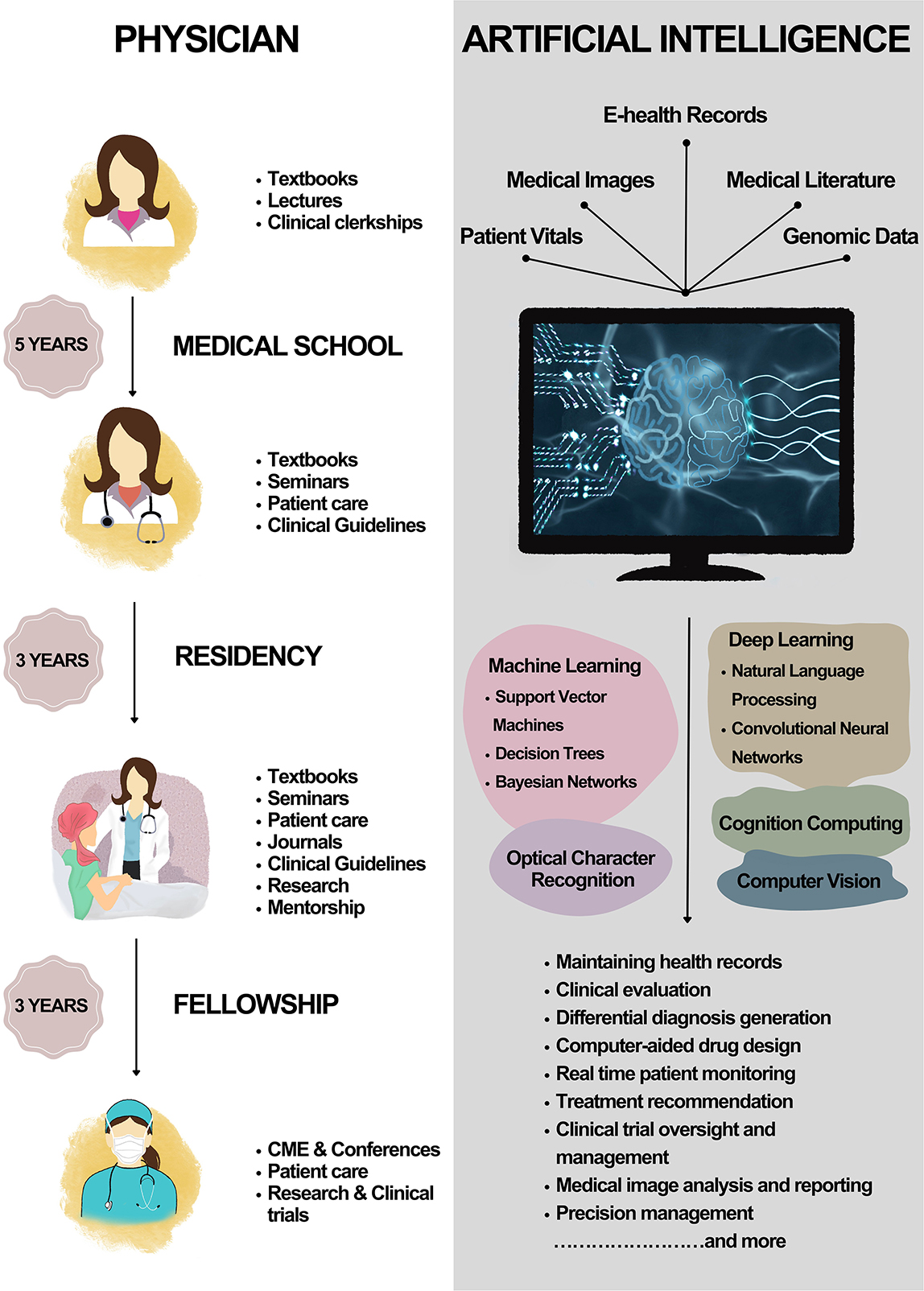

AI is set to transform healthcare. It will reduce documentation time. Enhance diagnoses. Save lives. Perhaps even replace physicians.

However, what I’ve learned after 11 years as a hospitalist is this: excitement without proof is hazardous. And AI—particularly in the realm of medicine—is not merely software. It constitutes treatment.

If we are to allow AI to affect critical life-or-death choices, it must adhere to the same standards as any clinical procedure. This necessitates thorough trials, clear design, and cultural compatibility. Anything less equates to malpractice.

We have encountered this before. Recall Theranos? An alluring promise, devoid of peer-reviewed validation, and the medical community’s worst-kept secret. It didn’t merely squander money—it jeopardized lives. If we approach AI in the same manner—deploying tools without proof, accountability, or ethics—we’re courting another catastrophe.

Clinical AI needs to be validated just like any medication or device. Randomized controlled trials are not optional; they are imperative. Dr. David Byrne describes this as the “secret sauce” for safe AI adoption, and he is correct. We would never allow a new chemotherapy to reach the market based solely on a compelling pitch and some retrospective data. So why are we doing this with algorithms?

Yet, it’s occurring. Tools are being introduced without explainability. Without an understanding of the data upon which they were trained. Without insight into how they’ll act in various populations. That’s not innovation—it’s negligence.

Doctors are not the foes of progress. However, we are skeptics for a reason. Skepticism safeguards patients. It’s the reason we double-check vital signs, challenge assumptions, and question protocols that seem off. If we seem hesitant to embrace AI, it is not out of resistance. It is rooted in our memories of what happens when systems overpromise and underdeliver.

This skepticism will only intensify if we persist in viewing physicians as obstacles to implementation rather than collaborators. If AI is to flourish in healthcare, it must be constructed around clinician trust. This begins with education. Our peers will not trust a tool they do not comprehend—nor should they.

We require AI literacy integrated into training programs, hospital onboarding, and executive conversations. We need frameworks that support ethical and clinically sound development, such as SPIRIT-AI and CONSORT-AI, incorporated into deployment strategies. Moreover, every leader must grasp that an AI rollout is not merely an IT initiative. It is a clinical intervention that warrants the same scrutiny, the same rigor, and the same humility.

Novelty does not guarantee quality. In Silicon Valley, speed is valued. In healthcare, safety reigns. The tech industry tests ideas on users. We evaluate interventions on patients. A single flaw in a user interface may annoy a customer. A single error in healthcare can cost a life.

And here’s the true irony.

Doctors want AI to succeed. We’re weary of cumbersome EMRs. We want our notes transcribed more swiftly, our patients flagged sooner, our discharge processes more streamlined. What we dread is bad change: change lacking evidence, implementation devoid of governance, and technology that adds to our burdens rather than alleviating them.

We cannot afford to invest millions in flashy AI dashboards while our EHRs still obstruct fundamental care. Or to deploy “smart” triage tools while neglecting the bias in their training datasets. Before we launch AI-equipped ambulances, let’s ensure we can rely on the software that forecasts readmissions.

Doctors do not oppose innovation. We oppose carelessness.

That’s why it’s time to change the narrative. AI is not merely an accessory; it is becoming an integral part of how we deliver care. And if we acknowledge that, then it must be assessed, regulated, and respected just like everything else we provide to our patients.

We need to begin treating AI as we would chemotherapy.

Not because it’s harmful—but because it’s potent. Because it demands precision, vigilance, and consent. Because it must be safe before scaling. And because the repercussions of getting it wrong are too significant.

AI isn’t the future of healthcare. It’s the present. But it will only thrive if we construct it on the principles that medicine was always intended to uphold: trust, truth, and evidence.

Rafael Rolon Rivera is an internal