Many physicians now use ChatGPT to summarize complex patient histories, draft discharge summaries, or explain rare clinical concepts. At first, it feels magical. But after a few weeks, a familiar frustration returns. Why does it still feel like extra work?

The problem isn’t the technology itself; it’s that ChatGPT was not designed for the complex, multistep workflows of clinical medicine. This mismatch is creating a new kind of fatigue: prompt fatigue.

**A Tale of Two Mondays**

Consider Dr. Anya Sharma, an internist at City General Hospital. Her Monday morning looks like many others: she copies and pastes raw data from the electronic health record (EHR) into ChatGPT. She prompts and re-prompts to highlight the cardiac history while manually cross-checking the chart to make sure the AI hasn’t missed subtle ECG changes. By the time her documentation is finished, she is mentally exhausted. She is no longer just a doctor; she has become an AI operator.

Down the hall, Dr. Ben Carter works differently. His “digital resident,” an autonomous AI agent embedded in the EHR, has been working through the night. It has synthesized the overnight admissions, flagged elevated troponin levels, and queued guideline-recommended orders for his review.

Dr. Carter spends five minutes reviewing and approving them. He walks into his first patient’s room with a clear clinical picture, ready to focus on patient care. The difference is not intelligence but agency.

**The Hidden Cost of the “Operator” Model**

ChatGPT functions as a passive knowledge engine. It waits for a prompt and responds, forcing physicians to manage every input and output: what data goes in, how it is framed, and whether the output is accurate.

Instead of reducing their cognitive load, the tool leaves physicians micromanaging a brilliant but overly literal intern. They didn’t go into medicine to become prompt engineers.

**What Agentic AI Changes**

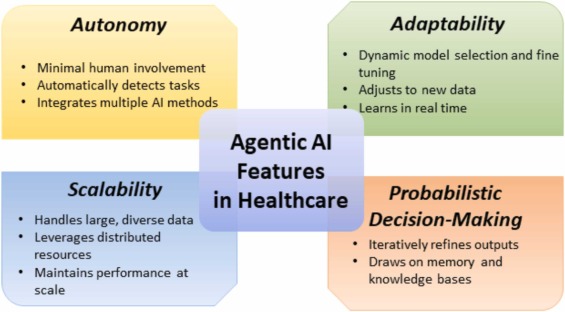

Agentic AI represents a fundamental shift in design. Unlike passive models, agentic systems can break complex tasks into intermediate steps and carry them out autonomously within defined limits. Key features include:

– **Tool use and API integration:** These systems go beyond generating text; they interact directly with EHRs, laboratory systems, and the medical literature.

– **Memory and context awareness:** They maintain a continuous understanding of a patient’s history as well as the current workflow goals.

– **Self-monitoring:** They detect inconsistencies or errors and refine their output before it reaches the physician.
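To make these three features concrete, here is a minimal Python sketch, assuming a toy setup in which a task is already broken into named steps, each step calls a “tool,” and the results are checked for gaps before anything is handed to a physician. The functions `fetch_admissions`, `fetch_labs`, and `run_task` and the `TOOLS` registry are hypothetical stand-ins, not any EHR vendor’s API, and the “memory” here is only a per-task dictionary, a simplification of the continuous patient context described above.

```python
from typing import Callable

# Hypothetical stand-ins for EHR and laboratory integrations.
# A real system would call vendor APIs here; these just return placeholder data.
def fetch_admissions(patient_id: str) -> dict:
    return {"patient_id": patient_id, "history": "placeholder history"}

def fetch_labs(patient_id: str) -> dict:
    return {"troponin": None}  # None models a result that is not yet available

# Tool use: each named step maps to a callable integration.
TOOLS: dict[str, Callable[[str], dict]] = {
    "admissions": fetch_admissions,
    "labs": fetch_labs,
}

def run_task(patient_id: str, steps: list[str]) -> dict:
    """Run a task that has been decomposed into intermediate steps."""
    context: dict = {}  # simplified "memory": results carried across steps
    for step in steps:
        context[step] = TOOLS[step](patient_id)
    # Self-monitoring: flag missing values before the draft reaches a physician.
    issues = [
        f"{step}.{key} missing"
        for step, data in context.items()
        for key, value in data.items()
        if value is None
    ]
    return {"context": context, "issues": issues, "ready_for_review": not issues}

if __name__ == "__main__":
    result = run_task("patient-001", ["admissions", "labs"])
    print(result["issues"], result["ready_for_review"])
```

In this sketch a missing lab value does not stop the agent; it simply marks the output as not ready, so the gap is surfaced to the physician rather than silently papered over.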

This shifts AI from a conversational assistant to an active participant in clinical work.

**From Co-Pilot to Clinical Supervisor**

In the agentic framework, the AI is not a “co-pilot” working alongside you. It functions as a digital team member that carries out tasks independently. The workflow is simple: Agent proposes. Human approves.

A physician assigns a task (for example, drafting a progress note, reviewing lab results, or identifying drug interactions). The agent works in the background and presents its findings for confirmation. The physician retains full responsibility but is no longer bogged down by clerical details, moving from operator to high-level supervisor.
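The “agent proposes, human approves” loop can be sketched as a simple review queue. This is a minimal illustration, assuming a hypothetical `ReviewQueue` class and `Proposal` record (neither comes from any real product): the agent can only add drafts, and nothing counts as approved until a named physician signs off.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class Proposal:
    """A draft produced by the agent; it is not acted on until a human signs off."""
    task: str                       # hypothetical task label, e.g. "draft progress note"
    content: str                    # the agent's draft output
    status: Status = Status.PENDING
    reviewer: Optional[str] = None  # who approved or rejected it


@dataclass
class ReviewQueue:
    """Hypothetical queue: the agent proposes, the physician approves or rejects."""
    items: list[Proposal] = field(default_factory=list)

    def propose(self, task: str, content: str) -> Proposal:
        proposal = Proposal(task, content)
        self.items.append(proposal)
        return proposal

    def pending(self) -> list[Proposal]:
        return [p for p in self.items if p.status is Status.PENDING]

    def decide(self, proposal: Proposal, reviewer: str, approve: bool) -> None:
        # Each proposal gets exactly one human decision.
        if proposal.status is not Status.PENDING:
            raise ValueError("proposal has already been reviewed")
        proposal.status = Status.APPROVED if approve else Status.REJECTED
        proposal.reviewer = reviewer

    def approved(self) -> list[Proposal]:
        # Only items a physician approved would ever be passed downstream.
        return [p for p in self.items if p.status is Status.APPROVED]


if __name__ == "__main__":
    queue = ReviewQueue()
    note = queue.propose("draft progress note", "placeholder draft text")
    queue.propose("review lab results", "placeholder summary")
    queue.decide(note, reviewer="Dr. Carter", approve=True)
    print(len(queue.pending()), [p.task for p in queue.approved()])
```

Recording the reviewer on every decision reflects the point above: the physician keeps full responsibility, and the queue makes that sign-off explicit.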

**Why This Reduces Burnout (Not Just Time)**

The time savings are significant: early deployments of agentic AI have saved health systems more than 15,000 documentation hours in a single year. But the real advantage is cognitive relief.

When clerical work no longer overwhelms us, we can devote our mental energy to decision-making, patient interactions, and clinical judgment. More than 80 percent of physicians using these tools report greater job satisfaction.

**The Guardrails of the Digital Ward**

Agentic AI supports clinical judgment rather than replacing it. There are clear boundaries where it must not act independently:

– **Live resuscitations:** Code Blue situations require immediate human decision-making.

– **High-risk medications:** Clinicians retain final prescribing authority.

– **Compassionate communication:** Delivering bad news remains inherently human.

– **Final diagnosis:** AI can suggest differential diagnoses, but physicians finalize the plan.
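One way to encode boundaries like these is an explicit policy table that the agent consults before acting. The sketch below assumes a hypothetical three-tier split (the agent drafts for approval, the agent only advises, or the agent stays out entirely) and made-up category labels; it is an illustration of the idea, not a clinical standard or any vendor’s configuration.

```python
from enum import Enum


class Mode(Enum):
    PROPOSE_FOR_APPROVAL = "propose_for_approval"  # agent drafts, physician signs off
    ADVISORY_ONLY = "advisory_only"                # agent may suggest; clinician decides
    HUMAN_ONLY = "human_only"                      # agent does not act at all


# Hypothetical category labels mirroring the boundaries listed above.
POLICY: dict[str, Mode] = {
    "progress_note": Mode.PROPOSE_FOR_APPROVAL,
    "lab_review": Mode.PROPOSE_FOR_APPROVAL,
    "drug_interaction_check": Mode.PROPOSE_FOR_APPROVAL,
    "high_risk_medication": Mode.ADVISORY_ONLY,
    "final_diagnosis": Mode.ADVISORY_ONLY,
    "live_resuscitation": Mode.HUMAN_ONLY,
    "breaking_bad_news": Mode.HUMAN_ONLY,
}


def mode_for(task_category: str) -> Mode:
    """Look up how much the agent may do; unknown categories fail safe to HUMAN_ONLY."""
    return POLICY.get(task_category, Mode.HUMAN_ONLY)


def agent_may_draft(task_category: str) -> bool:
    """True only where the agent may prepare something for physician sign-off."""
    return mode_for(task_category) is Mode.PROPOSE_FOR_APPROVAL


if __name__ == "__main__":
    for category in ("progress_note", "high_risk_medication", "live_resuscitation", "unlisted_task"):
        print(category, mode_for(category).value)
```

The key design choice is the default: a task the policy does not recognize is treated as human-only, so gaps in the table fail toward more oversight rather than less.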

Human oversight is essential.

**The Bottom Line**

The transition to agentic AI will require EHR vendors to open their APIs, hospitals to establish liability frameworks, and clinicians to develop new supervisory skills.

The message for physicians is clear: agentic AI is about delegation, not conversation. The future of medicine lies not in conversing more efficiently with AI but in leading a digital team wisely.