Last week, I spent more than two hours on a prior authorization for a patient who needed a medication she had been stable on for years. Two hours of hold music, transferred calls, and faxed forms, while my other patients waited.

That same week, Anthropic announced Claude for Healthcare: an AI tool that can verify coverage requirements, generate claims appeals, and move prior authorizations through in minutes. Just days earlier, OpenAI had launched ChatGPT Health. Both products promise to free physicians from the administrative burden that is drowning us.

My first reaction was relief. My second was a question every physician should be asking: What happens when AI can navigate the healthcare system better than we can?

The administrative strain is overwhelming us

Let’s be honest. Physicians spend nearly two hours on administrative tasks for every hour of direct patient care. Prior authorizations alone consume an estimated 34 hours per week for some specialists. That isn’t practicing medicine; it’s paperwork with a stethoscope.

Claude for Healthcare targets exactly this problem:

– Integration with the CMS coverage database for real-time verification.

– ICD-10 lookups for accurate coding.

– Automatic generation of claims appeals with supporting documentation.

– Access to PubMed’s 35 million articles to inform clinical decisions.

Commure, one of Anthropic’s healthcare partners, estimates that Claude could “save clinicians millions of hours each year.” I believe that. The bigger question is how we will use those hours, and what we might give up along the way.

What Claude actually does

Unlike the AI chatbots patients have used for years, Claude for Healthcare plugs directly into the infrastructure of medicine itself.

For us:

– Confirms prior authorization requirements before submission.

– Drafts appeals that cite relevant clinical data.

– Searches through medical literature in seconds rather than hours.

– Connects to EHR systems that support the FHIR standard.

For patients:

– Translates lab results and medical reports into plain language.

– Links to Apple Health and Android Health Connect for wellness information.

– Enables record sharing through HealthEx and Function connectors.

The appeal is obvious: the two hours I spent on that prior authorization shrink to two minutes, and I get to practice medicine again.

The competition is on

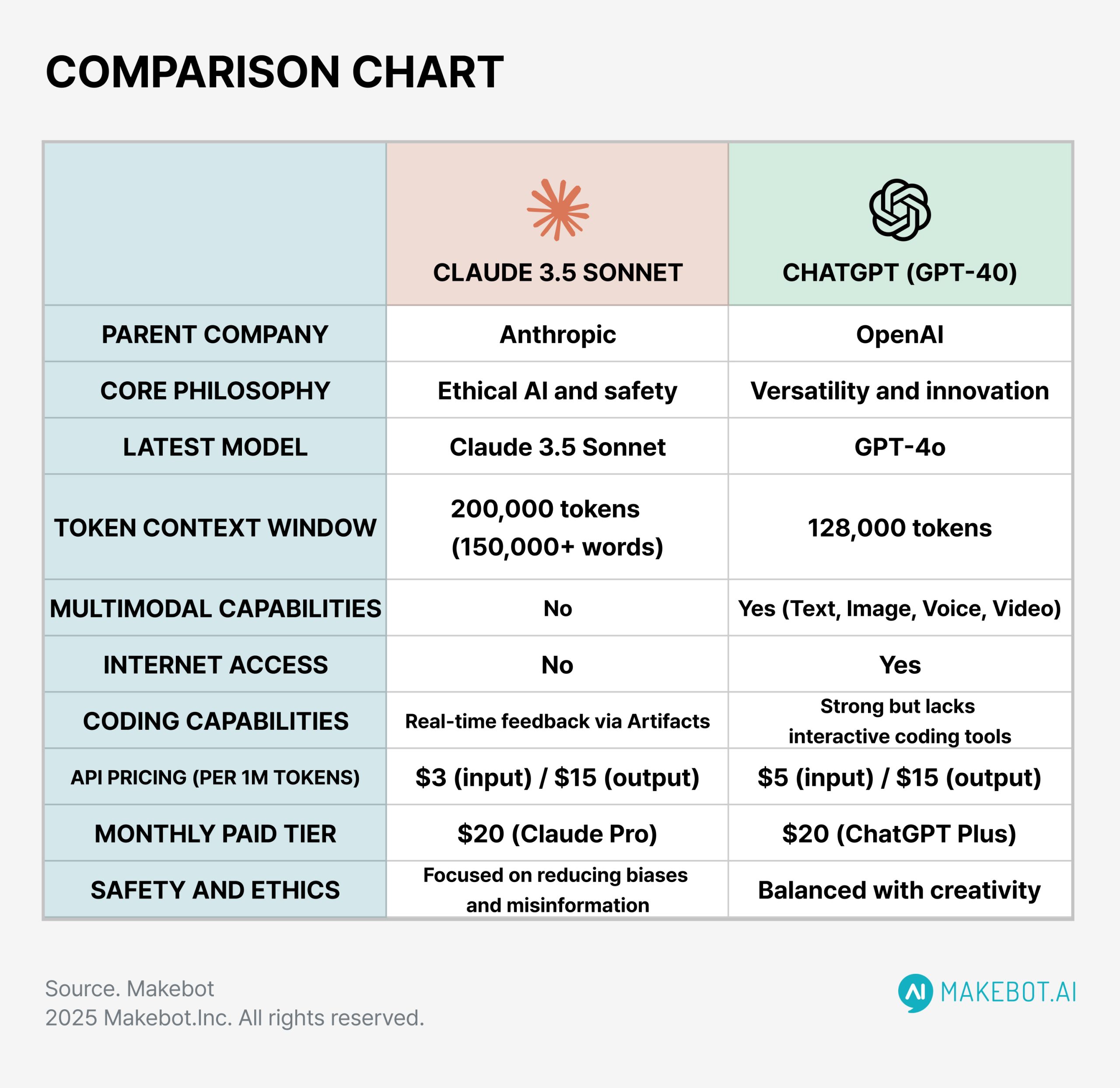

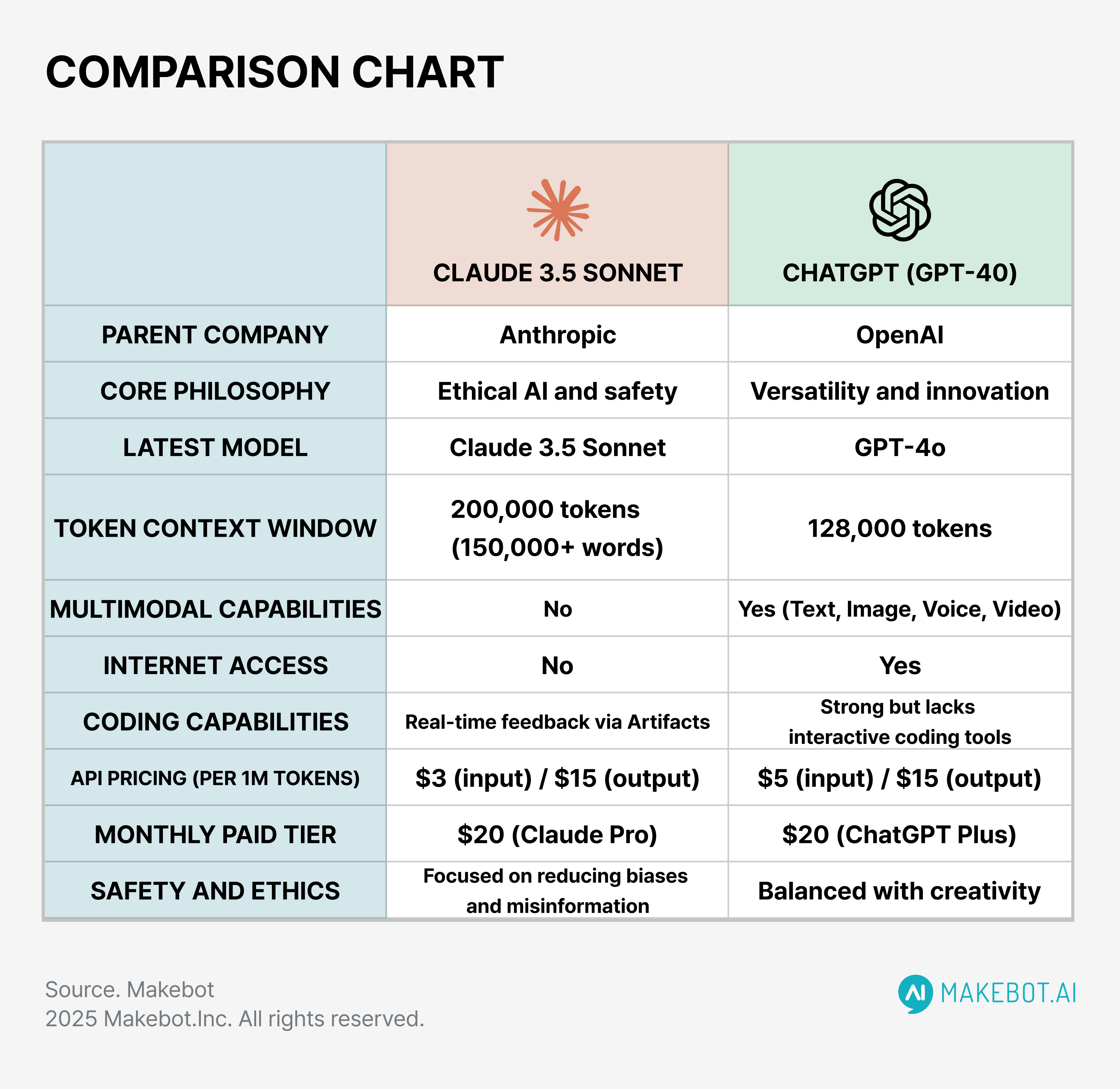

Anthropic and OpenAI are now competing head-to-head in healthcare. OpenAI claims 800 million weekly users and leads in consumer AI; ChatGPT Health extends that reach into personal wellness. Anthropic’s strength is enterprise adoption, with Claude already serving more than 4,400 healthcare organizations through partners such as Commure.

Sanofi says that “Claude is crucial to Sanofi’s AI transformation and is utilized daily by most Sanofians.” This is no longer an experiment. It is core infrastructure.

Meanwhile, the FDA is loosening its rules on clinical decision support software. Products that offer a single recommendation can now bypass FDA review if they meet certain criteria. Just as the technology is accelerating, the regulatory guardrails are coming down.

The trust dilemma

Both companies emphasize safety: health data will not be used for AI training, users can revoke permissions at any time, and Claude includes disclaimers directing users to healthcare professionals. Anthropic also requires review by a qualified professional for care decisions.

But let’s be candid: AI systems can fabricate information, and they present their errors with confidence. They lack the clinical judgment that comes from years of observing patients, talking with families, and learning from mistakes.

An Anthropic representative acknowledged that these systems “can make mistakes and should not substitute professional judgment.” That’s reassuring, until a patient arrives convinced that a chatbot has diagnosed them correctly, and we have to explain why it hasn’t.

What concerns me

My worry isn’t that AI will replace physicians. It’s that AI will be used to justify eroding the conditions that make good medicine possible.

If Claude can manage prior authorizations in two minutes, will administrators expect us to increase our patient load? If AI can instantly summarize charts, will reimbursement for documentation time be eliminated? If patients can receive “answers” from chatbots, will insurers argue that we are unnecessary?

Technology itself is neutral. The systems that deploy it are not.

What I’m cautiously hopeful for

Still, I see genuine promise:

– Democratizing medical knowledge: Billions of people lack access to a physician. AI that explains lab results in plain language could meaningfully change care in underserved communities.

– Alleviating burnout: If AI absorbs the administrative burden that drives physicians out of the field, more of us might actually stay in practice.

– Accelerating research: Claude’s life sciences tools could help get medications to patients faster through better trial design and smoother regulatory processes.

– Reducing information asymmetry: Patients who understand their conditions become active participants in their care rather than passive recipients.

The key word is “could.” Whether these benefits materialize depends on how health systems, insurers, and regulators choose to deploy the technology.

What physicians should do now

This technology isn’t on the horizon; it’s already here. The choice is whether we engage with it thoughtfully or let others decide how it reshapes our profession.

Critically assess AI outputs. Halluc