The Bay Area has emerged as a hub of artificial intelligence, home to prominent startups and research labs racing to show how AI can transform everyday life.

A few months ago, California released its Frontier AI Policy Report, commissioned by Governor Gavin Newsom, which warned that without greater oversight and transparency, AI models pose risks to equity and accountability, particularly in health care. That threat is especially pressing in dermatology.

Not long ago, I experienced this disparity firsthand while searching online to identify unusual rashes on my skin and to understand why they were getting worse. As I anxiously scrolled through image after image, I rarely found any that matched my Southeast Asian skin tone. My frustration grew as I realized that the resources I relied on did not adequately represent patients like me.

What I saw online, however, was only a glimpse of a much larger problem: Dermatology as a field does not fairly represent all skin tones. That gap becomes especially dangerous when it is built into AI tools, which are gaining traction in health care.

AI is changing how we recognize, assess, and treat skin conditions. Some apps can flag skin problems almost instantly. Machine learning tools can also help dermatologists confirm diagnoses or recognize rare and complex diseases. But here lies the problem: Most dermatology AI models are trained mainly on images of lighter skin. As a result, these tools often misread, or miss entirely, conditions on darker skin.

Many people depend on these technologies because they offer a way in for patients who would otherwise struggle to see a specialist. In rural areas and among uninsured populations, mobile apps and teledermatology are often the only options available. I have used them myself, especially when the next dermatologist appointment was weeks away. But when these tools are biased against darker skin, they do not expand access to care as intended. Instead, they leave out the people who need help most.

In the Bay Area, where people of color make up the majority, the underrepresentation of darker skin in dermatology images has alarming consequences for diagnosis and treatment. The problem reaches into clinics, too: About 12 percent of dermatologists already report using AI tools in patient care. When these tools do not work equally well for everyone, they risk misleading providers and putting patients' lives at risk.

For AI to truly improve dermatologic care nationwide, patients and providers need a clearer view of how these tools are built. That is why transparency is crucial. Companies should openly disclose what data their tools are trained on and how well they perform across diverse populations and skin tones. Such transparency should be a precondition for providers and patients to trust and rely on these technologies.

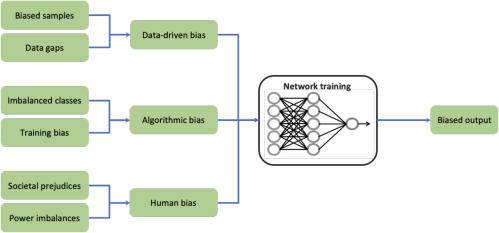

The bias in dermatology AI stems directly from the data these tools are trained on, so fixing it starts with building better datasets. With its leading tech companies, the Bay Area has a real opportunity to lead in creating dermatology image collections that reflect the diversity not only of California but of the world. These libraries should include skin of every shade, across all races and ethnic backgrounds. Wherever possible, the data should be openly available to support better research and fairer tools.

California's Frontier AI Policy Report called for this kind of accountability. If the state leads the way on frontier AI, it should also lead on equitable medical AI, starting with the Bay Area.

The scarcity of images that looked like my skin was frustrating, and it delayed my treatment. For many others, it could lead to a missed diagnosis, sometimes with serious consequences.

AI will undoubtedly play a growing role in how we identify and treat skin conditions. The pressing question is no longer whether these tools will be used, but whether they will be built to serve everyone fairly. That requires deliberate choices about data, testing, and accountability. The Bay Area has the chance to set the standard.

Alex Siauw is a patient advocate.